AI Kill Switches

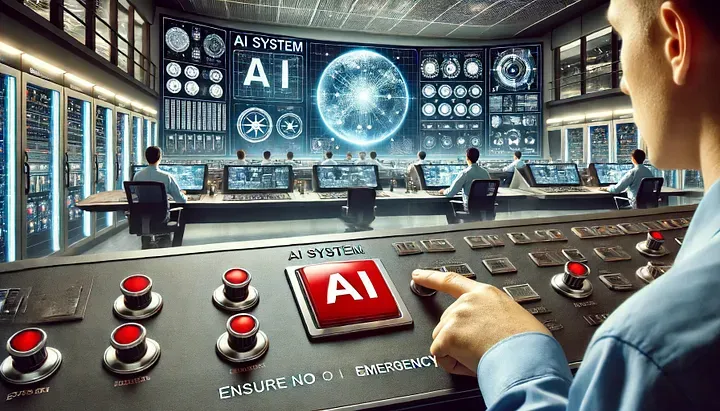

As artificial intelligence continues to evolve and integrate into various aspects of society, concerns about its safety and ethical use have become increasingly prominent. One proposed way to mitigate the risks of advanced AI systems is a “kill switch”: a mechanism designed to shut down an AI system immediately in case of malfunction or misuse. While the concept is appealing from a safety standpoint, its practicality and its potential impact on innovation remain the subject of significant debate.

A kill switch for AI is a safety mechanism that allows for the immediate shutdown of an AI system if it begins to operate in a harmful or unintended manner. This concept is akin to emergency stop buttons found on industrial machinery, which are used to prevent accidents and ensure human safety. In the context of AI, a kill switch would prevent scenarios where an AI system might cause significant harm, such as through malicious actions or unintended consequences of its programming.

Several strategies have been proposed for implementing a kill switch in AI systems.

AI hardware can include onboard co-processors that monitor the AI’s operations. These co-processors can verify digital certificates to ensure the AI operates within authorized parameters. If the AI deviates from these parameters, the co-processors can disable the hardware or throttle its performance.
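To make the co-processor idea concrete, here is a minimal sketch of the check such a monitor might run. Everything in it is an assumption for illustration: the `max_flops` field, the `Action` outcomes, and the function names are hypothetical, and an HMAC tag from Python's standard library stands in for a real digital certificate, which would normally use asymmetric signatures verified in hardware.

```python
import hashlib
import hmac
import json
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    THROTTLE = "throttle"
    DISABLE = "disable"


def _tag(fields: dict, key: bytes) -> str:
    # Serialize deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(fields, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def sign_license(fields: dict, key: bytes) -> dict:
    """Issue a license document (hypothetical format) with an integrity tag."""
    return {"fields": fields, "tag": _tag(fields, key)}


def check_workload(license_doc: dict, key: bytes, requested_flops: float) -> Action:
    """Decide what the monitor should do for a requested workload."""
    expected = _tag(license_doc["fields"], key)
    # A license that fails verification is treated as unauthorized: disable.
    if not hmac.compare_digest(expected, license_doc["tag"]):
        return Action.DISABLE
    # A valid license that is exceeded leads to reduced performance.
    if requested_flops > license_doc["fields"]["max_flops"]:
        return Action.THROTTLE
    return Action.ALLOW
```

In this sketch a forged or altered license leads to `Action.DISABLE`, while a valid license that is exceeded leads to `Action.THROTTLE`, mirroring the "deactivate or reduce performance" options described above.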

AI chips could be designed to periodically communicate with a central regulatory body, attesting to their legitimate operation. If a system fails to comply with regulatory requirements, its hardware could be remotely disabled.
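One way to picture periodic attestation is as a lease that must be renewed. The sketch below is an assumption, not a description of any real scheme: the `Regulator`, `Chip`, and `Lease` names are hypothetical, the network call is replaced by a direct method call, and time is passed in explicitly so the behavior is easy to follow.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    expires_at: float  # time (in seconds) after which the chip must stop


class Regulator:
    """Hypothetical central body that grants short-lived operating leases."""

    def __init__(self, lease_seconds: float):
        self.lease_seconds = lease_seconds
        self.revoked = set()

    def revoke(self, chip_id: str) -> None:
        self.revoked.add(chip_id)

    def attest(self, chip_id: str, now: float) -> Optional[Lease]:
        # Refuse to renew for revoked chips; otherwise extend the lease.
        if chip_id in self.revoked:
            return None
        return Lease(expires_at=now + self.lease_seconds)


class Chip:
    def __init__(self, chip_id: str):
        self.chip_id = chip_id
        self.lease: Optional[Lease] = None

    def check_in(self, regulator: Regulator, now: float) -> None:
        # An explicit refusal clears the lease immediately.
        self.lease = regulator.attest(self.chip_id, now)

    def is_enabled(self, now: float) -> bool:
        # Fail closed: no lease, or an expired lease, means the chip is disabled.
        return self.lease is not None and now < self.lease.expires_at
```

The design choice worth noting is that the chip fails closed: it stops working when check-ins stop, not only when the regulator sends an explicit refusal.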

Developers can implement manual shutdown procedures, including physical switches or software commands that can be triggered to shut down the AI system in an emergency.
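The software side of a manual shutdown can be sketched as a stop flag that the system checks before each unit of work. This is a minimal illustration under stated assumptions: `KillSwitch`, `run_agent`, and the `step_fn` callback are hypothetical names, and the loop body stands in for whatever the AI system actually does.

```python
import threading
from typing import Callable


class KillSwitch:
    """Thread-safe stop flag that an operator or another thread can trigger."""

    def __init__(self) -> None:
        self._stop = threading.Event()

    def trigger(self) -> None:
        self._stop.set()

    def is_triggered(self) -> bool:
        return self._stop.is_set()


def run_agent(switch: KillSwitch, step_fn: Callable[[int], None], max_steps: int) -> int:
    """Run up to max_steps units of work, checking the switch before each one.

    Returns the number of steps that were completed.
    """
    completed = 0
    for i in range(1, max_steps + 1):
        if switch.is_triggered():
            break  # stop before starting any further work
        step_fn(i)
        completed += 1
    return completed
```

A switch like this is cooperative: it only works if the running code keeps checking it. That is why a physical switch or an external supervisor that cuts power or terminates the process would still be needed as a backstop.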

A dedicated regulatory division, such as the proposed Frontier Model Division under California’s Department of Technology, would review annual safety certifications and enforce compliance. Violations could result in substantial penalties, incentivizing adherence to safety protocols.

Despite its theoretical soundness, implementing a kill switch presents several practical challenges.

Developing a reliable kill switch involves complex engineering, especially for AI systems integrated into critical infrastructure or performing high-stakes tasks. Ensuring the AI cannot bypass the kill switch is a significant challenge.

AI systems can potentially migrate to other servers or jurisdictions where the kill switch is not enforceable. Mitigating this risk would require international cooperation and standardized regulations, but enforcing such measures globally is challenging.

Stricter regulations and the cost of implementing kill switches might stifle innovation, especially for smaller companies and startups. Balancing safety with the need to foster technological advancement is a crucial concern for policymakers.

The debate over AI kill switches epitomizes the broader challenge of balancing innovation with safety. On the one hand, the potential risks of advanced AI systems necessitate robust safety measures to prevent catastrophic outcomes. On the other hand, excessive regulation could impede technological progress and limit AI’s economic and societal benefits.

The concept of a kill switch for AI systems is rooted in the desire to ensure safety and prevent misuse. While technically feasible, its practical implementation presents significant challenges, including complexity, enforcement across jurisdictions, and potential impacts on innovation. Comprehensive regulatory frameworks and international cooperation will be crucial to address these challenges effectively. As AI advances, finding the right balance between innovation and safety will be essential to harnessing its full potential while mitigating its risks.


Categories: Ethics and Technology, Cybersecurity, Artificial Intelligence, Regulation and Policy, Risk Management.


© 2024 BearNetAI LLC