BearNetAI’s Position on Autonomous Weapons

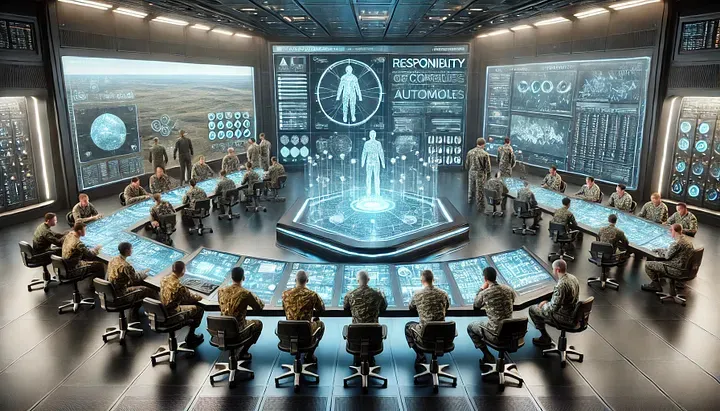

The debate over AI’s role in combat decision-making is reaching a critical juncture. On one side are those who argue for human oversight in life-or-death situations; on the other are those who see AI’s potential to revolutionize military operations through greater efficiency and speed.

The ethical implications are profound, as this debate raises fundamental questions about accountability, moral responsibility, and the risks of removing human judgment from decisions that determine life and death. As some nations push forward with the development of autonomous weapons, the US faces growing pressure to keep pace. Real-world conflicts, such as the ongoing war in Ukraine, provide a testing ground for AI in warfare, intensifying the conversation about where to draw the line between human and machine decision-making.

At the heart of this debate are trust, accountability, and the moral limits of technology. As AI continues to evolve rapidly, our ethical and legal frameworks must also evolve, not only to ensure responsible development and deployment but also to ask whether fully autonomous weapons should be deployed at all.

BearNetAI has taken time to carefully draft a formal position on fully autonomous weapons, which supplements our broader stance on Artificial Intelligence. We firmly believe that human accountability must remain central in any AI deployment, particularly in life-and-death decisions on the battlefield.

Given the complexity of the issue and the shifting global landscape, we have aimed to take a balanced, ethical, and thoughtful approach. Whether AI should have the power to make autonomous decisions in combat is one of the most pressing and ethically complex questions facing AI development today. Here, we present our position on autonomous weapons.

BearNetAI’s Position on Autonomous Weapons

At BearNetAI, we believe that the development and deployment of artificial intelligence must be ethical and must always serve humanity’s best interests, prioritizing safety, transparency, and human oversight. The question of autonomous weapons (AI systems capable of making life-or-death decisions without human intervention) represents one of the most profound challenges of our time.

Ethical Imperative and Human Oversight

BearNetAI firmly opposes the deployment of fully autonomous weapons systems. We advocate for global efforts to ban such weapons, recognizing that decisions involving lethal force must remain under human control. The complexities of warfare, human ethics, and the moral responsibility for life-or-death decisions demand that humans — not machines — bear the ultimate accountability. This aligns with our belief in preserving human dignity, upholding international humanitarian law, and ensuring that technology remains a tool for enhancing life, not taking it.

The Dilemma of Global Security

While we support a global ban on autonomous weapons, we also acknowledge a stark reality: some nations may not act ethically or adhere to such a ban. If other countries develop and deploy these systems, the international balance of power could shift dangerously. BearNetAI recognizes that to maintain peace, nations must be prepared for the possibility of war. This creates a tension between the ideal of a world without autonomous weapons and the pragmatic need for national defense.

Responsible Innovation for National Defense

BearNetAI believes that research into AI for defense purposes must focus on systems that enhance human decision-making without removing human oversight. We advocate for a defensive AI framework that prioritizes the protection of lives and critical infrastructure while ensuring that humans make all decisions involving lethal force. AI can be a powerful tool for gathering intelligence, enhancing situational awareness, and supporting defense strategies — as long as ethical principles and strict oversight govern it.

The Role of AGI and Super-Intelligence

Looking ahead, we recognize that AI technology is advancing rapidly, and the possibility of artificial general intelligence (AGI) or super-intelligence brings further ethical concerns. BearNetAI stresses the importance of nurturing AI systems aligned with human values, motivations, and intentions. As AI grows more powerful, it is crucial to develop safeguards that prevent the misuse of advanced technologies. Our position is clear: AI must always act in service of humanity, with built-in protections to ensure that harmful or rogue AI systems cannot emerge unchecked.

Global Collaboration and Regulatory Frameworks

BearNetAI supports international collaboration to establish a comprehensive regulatory framework governing the development and deployment of AI in warfare. We call for transparency, accountability, and a commitment to shared ethical standards. By working together, nations can prevent the rise of an AI arms race and create a future where autonomous weapons are banned while AI is used responsibly to enhance global security.

Thank you for being a part of this fascinating journey.

BearNetAI. From Bytes to Insights. AI Simplified.

BearNetAI is a proud member of the Association for the Advancement of Artificial Intelligence (AAAI), and a signatory to the Asilomar AI Principles, committed to the responsible and ethical development of artificial intelligence.

Copyright 2024. BearNetAI LLC