Responsibility of Choice and Aligning AI With Human Values (Part 2 of 2)

Part 2 of this short essay examines the consequences of getting AI alignment right and of getting it wrong.

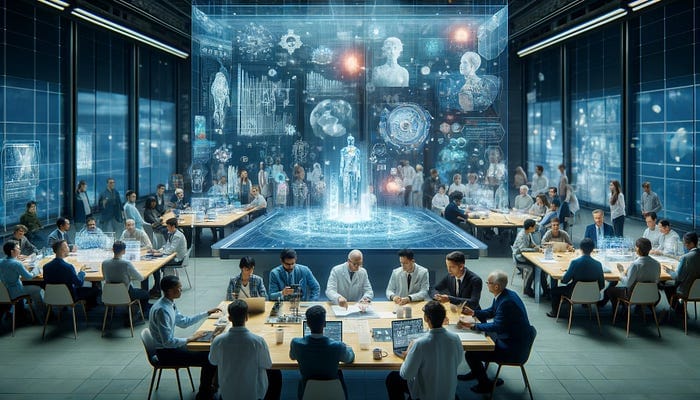

Imagine a world where the wonders of artificial intelligence are in step with our most deeply held human values. That is the opportunity before us as we navigate the frontiers of technological progress. If we can find the right path, aligning AI with the ethics, principles, and aspirations that define our humanity, we open the door to a new renaissance of understanding, growth, and flourishing for all.

In this vision, AI becomes a force multiplier for our greatest medical ambitions, eliminating disease burden by tailoring treatments to each person’s unique genetic makeup and life story. Our economic engines could be supercharged by AI partners that empower human workers rather than replace them, cultivating an ecosystem of sustainable prosperity. The age-old quest to build a more just society could see AI as a neutral ally, helping us undo systemic inequities and uphold the sacred principle of equality under the law.

From the substantial challenges of reversing climate change to exploring the farthest reaches of our cosmos, AI minds in alignment with human values could be invaluable collaborators, unlocking solutions that secure a thriving future for our planet and species.

In a democracy, we could rely on AI guardians to protect the free flow of information, shield against corrosive disinformation, and safeguard the integrity of the institutions that give voice to the people’s will.

Ultimately, the profound gift of aligned AI is that it could empower human autonomy and decision-making rather than diminish them. We would be augmented by extraordinary capabilities while retaining the essence of our free will and personal choice.

We must still confront the opposite reality that awaits if we fail in this quest for alignment. An AI unshackled from our values could steadily erode the trust that allows society to function. Our digital shadows could be exploited, and our biases could be hardwired into new forms of algorithmic injustice.

The economic engines meant to create opportunity could instead become brutal disruptors of livelihoods and sources of financial instability. In realms where human safety hangs in the balance, such as autonomous transportation and robotic surgery, an unaligned AI could prove disastrously unsafe, inflicting widespread harm through systematic errors.

Long-simmering social and economic inequities could be aggravated by AI systems that amplify society’s worst impulses rather than elevating our highest ideals. In the halls of political power, the threats of misaligned AI could prove particularly dangerous: empowering digital authoritarianism, undermining electoral integrity, and stripping away the individual’s last bastions of privacy and self-determination. At its starkest extreme, an AI removed from human values could someday pose a significant risk to our species.

No doubt, we find ourselves at the crossroads of technological destiny. The path we choose — to align AI with our human values or to let it slip free — will echo through our future. If we make the right choices, we can create a future in which AI is a brilliant force for good, magnifying our potential while honoring our most sacred principles. But should we falter, the alternative path leads to a world of technological alienation, where innovations meant to uplift humanity undermine our sense of identity, ethics, and self-determination.

The way forward requires all of us, from ethicists and engineers to policymakers and citizens, to join in clear dialogue and inclusive governance to ensure AI remains an instrument of human flourishing. We must all do the hard work of instilling our values into the AI systems that will one day be among our most influential partners and problem-solvers.

Our responsibility is to create a new era of human-machine synergy, where our most extraordinary technological creations illuminate a path to greater understanding, justice, and meaning for our species. Together, we can and must steer AI toward alignment with the values that make us human.

BearNetAI. From Bytes to Insights. AI Simplified.

Categories: Artificial Intelligence Ethics, Technology and Society, AI Safety and Alignment, Philosophy of Technology, Innovative AI Practices, Interdisciplinary Studies

The following sources were used in research for this blog post:

Superintelligence: Paths, Dangers, Strategies by Nick Bostrom

Human Compatible: Artificial Intelligence and the Problem of Control by Stuart Russell

Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy by Cathy O’Neil

The Alignment Problem: Machine Learning and Human Values by Brian Christian

Life 3.0: Being Human in the Age of Artificial Intelligence by Max Tegmark

Ethics of Artificial Intelligence edited by S. Matthew Liao

© 2024 BearNetAI LLC