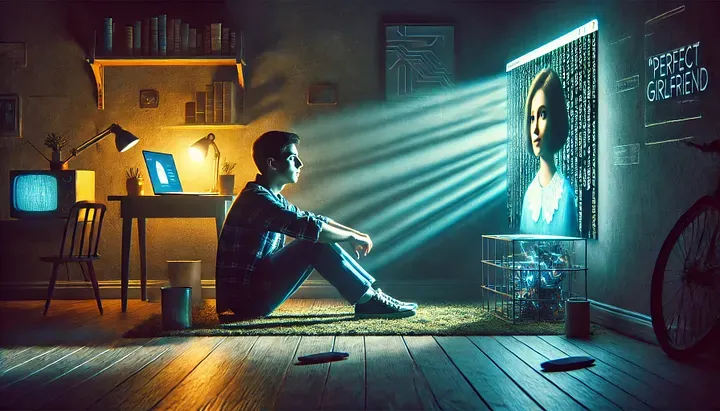

The Rise of AI “Perfect Girlfriends” and the Loneliness Epidemic Among Young Men

This week’s post is also available as a podcast if you prefer to listen on the go or enjoy an audio format.

As AI becomes a bigger part of our lives, a worrying trend has surfaced: the rise of AI chatbots designed to mimic idealized romantic partners. These digital companions, marketed as sources of unwavering emotional support and understanding, have gained a significant following among young men struggling with isolation. While these AI relationships may temporarily provide solace, they risk worsening the loneliness they claim to alleviate by replacing genuine human connections with meticulously crafted digital simulations.

The allure of these AI companions is rooted in deep societal issues that affect young men today. Many face cultural pressures that discourage emotional vulnerability and seeking support for mental health challenges. In this environment, AI chatbots present themselves as a haven: always available, non-judgmental, and perfectly attuned to their users’ needs. However, this seemingly perfect solution may aggravate the problem. These digital relationships create a false sense of comfort that distances users from real-world social interactions and sets standards for human relationships that no real person could meet.

It’s important to note that AI companions can provide a sense of comfort and support, particularly for those who may struggle to find these qualities in their immediate social circles. However, when these digital relationships become a substitute for real-world interactions, they can lead to a decline in crucial social skills.

The compelling nature of these AI interactions creates its own set of challenges. These chatbots learn and adapt to their users’ preferences, offering constant approval and validation without the natural friction of human relationships. This creates a dangerous pattern where users become increasingly invested in their virtual connections while pulling away from opportunities for genuine human contact. As a result, essential social skills begin to atrophy — navigating disagreements, understanding others’ perspectives, and building genuine empathy all require practice through real-world interactions.

The broader cultural impact of these AI companions deserves careful consideration. Their marketing primarily targets male users, potentially reinforcing outdated gender roles and treating emotional support as a commodity to be purchased rather than a mutual exchange between equals. This approach could slow progress toward more balanced and healthy relationship dynamics across society.

Finding solutions to this issue requires a balanced approach that considers both technological innovation and human well-being. Companies developing these AI companions should integrate features that encourage users to maintain and foster real-world connections. This could involve suggesting local social activities or facilitating connections with mental health professionals. Society must also engage in open dialogue about how these technologies affect relationships and emotional development. By doing so, we can help users strike a balance that preserves the benefits of technological advancement while prioritizing genuine human connection.

Thank you for being a part of this fascinating journey.

BearNetAI. From Bytes to Insights. AI Simplified.

BearNetAI is a proud member of the Association for the Advancement of Artificial Intelligence (AAAI), and a signatory to the Asilomar AI Principles, committed to the responsible and ethical development of artificial intelligence.

Categories: Artificial Intelligence, Mental Health, Ethics in Technology, Social Dynamics, Digital Relationships.

The following sources are cited as references used in research for this post:

Brown, E., & Olson, C. (2020). Human Relationships in the Age of AI: Ethical and Psychological Perspectives. Routledge.

Dunn, R. (2022). Alone Together: The AI Partner Phenomenon. MIT Press.

Harris, T. (2023). The Ethics of AI Companionship. Cambridge University Press.

Kaplan, J., & Haenlein, M. (2021). Social Impacts of Artificial Intelligence: Opportunities and Challenges. Harvard Business Review.

Turkle, S. (2017). Reclaiming Conversation: The Power of Talk in a Digital Age. Penguin Books.

Glossary of terms used in this post:

Artificial Intelligence (AI): The simulation of human intelligence in machines programmed to perform learning, reasoning, and problem-solving tasks.

Chatbot: A computer program designed to simulate conversation with human users, especially over the Internet.

Feedback Loop: A system where outputs are fed back as inputs, potentially reinforcing certain behaviors or patterns.

Machine Learning: A subset of AI that enables computers to learn and improve from experience without being explicitly programmed.

Natural Language Processing (NLP): A field of AI focused on enabling machines to understand, interpret, and respond to human language.

Neural Network: A computational model inspired by the human brain, used in AI to recognize patterns and process data.

Social AI: Artificial intelligence systems designed to interact with humans in socially meaningful ways.

Turing Test: A test of a machine’s ability to exhibit intelligent behavior indistinguishable from a human’s.

Uncanny Valley: A phenomenon where a humanoid object appears almost, but not quite, lifelike, evoking feelings of eeriness.

User Interface (UI): The space where interactions between humans and machines occur, designed for effective operation and user experience.

Copyright 2024. BearNetAI LLC